Digital forensic investigators and examiners need to be able to find evidence quickly, and one of the ways ADF provides this capability is through PhotoDNA.

ADF digital forensic software uses a variety of methods to locate and identify images. In cases involving CSAM (Child Sexual Abuse Material) or CEM (Child Exploitation Material), ADF lets investigators collect and analyze child sexual abuse images and evidence quickly, and filter out images that are already known so they do not have to be subjected to them again.

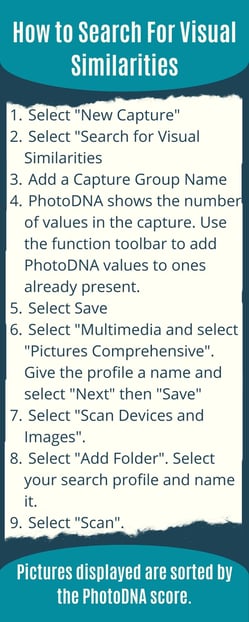

In addition to searching for files by hash values (including Project VIC and CAID data), photo and video classification, keyword searching, and photo probability, ADF also offers the ability to search for visual similarities using PhotoDNA.

About PhotoDNA

Microsoft Digital Crimes Unit (DCU) partnered with the National Center for Missing and Exploited Children (NCMEC), Microsoft Research, and Dartmouth College to create PhotoDNA in 2009 as a method for identifying images of CSAM and CEM.

Although the method is considered a form of hashing, it differs from traditional hashing in that PhotoDNA creates a signature that will find not only the exact image but also images that are visually similar. In this way, PhotoDNA looks at the visual content rather than the file itself.

PhotoDNA Image Recognition

With traditional hashing methods, if a photo is resized or recolored, or its data is manipulated in any way, the hash of the manipulated image will no longer match the hash of the original. With PhotoDNA, exact copies can still be reliably identified, along with manipulated or visually similar versions. PhotoDNA is not an object recognition technology; for that purpose, as mentioned above, ADF incorporates an image classifier that lets pictures be filtered by one or more of 11 visual classes.
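To make the difference concrete, here is a minimal Python sketch. It does not reproduce PhotoDNA, and it is not ADF's implementation. As a stand-in it uses a toy "average hash", a simple and publicly known perceptual hashing idea, on a tiny hand-made grayscale image. The function names and the pixel values are assumptions chosen purely for illustration.

```python
import hashlib


def sha256_of(pixels):
    """Traditional hash: digest of the raw pixel bytes (values must be 0-255)."""
    data = bytes(p for row in pixels for p in row)
    return hashlib.sha256(data).hexdigest()


def average_hash(pixels):
    """Toy perceptual hash (NOT PhotoDNA): one bit per pixel,
    set when the pixel is brighter than the image's mean brightness."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]


def hamming_distance(a, b):
    """Number of bit positions in which two equal-length hashes differ."""
    return sum(x != y for x, y in zip(a, b))


# Hypothetical 4x4 grayscale image: dark left half, bright right half.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [10, 20, 200, 210],
    [15, 25, 205, 215],
]

# Recolored copy: every pixel brightened by 20.
brightened = [[min(255, p + 20) for p in row] for row in original]

# Visually different image: a checkerboard.
unrelated = [
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
]

# The traditional hash no longer matches after the brightness change.
print(sha256_of(original) == sha256_of(brightened))

# The perceptual hashes stay close for the edited copy and far for the unrelated image.
print(hamming_distance(average_hash(original), average_hash(brightened)))
print(hamming_distance(average_hash(original), average_hash(unrelated)))
```

In this sketch the SHA-256 digests of the original and the brightened copy differ, while their average hashes are identical (distance 0). The checkerboard differs from the original in 8 of 16 bits. A practical perceptual hash would first scale every image to a fixed small size, so resized copies can be compared too, and would treat any distance below a chosen threshold as a match.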

Using PhotoDNA to Fight Child Sexual Abuse Material and Child Exploitation Material

ADF tools can identify, collect, and automatically tag evidence by keyword and hash value. Further, ADF tools automatically classify and filter by specific visual classes, including Child Sexual Abuse Material, Pornography, and Upskirting. Photo probability assists in removing items that are not photos, such as emojis and icons. In the short video above, our digital forensic investigator uses weapons to illustrate how to search for images by visual similarity. Read more about ADF's digital forensic image recognition and classification.
